This customer worked in the 3D animation space, which means thousands of files for each frame they render and a 24/7 operation, as the renderfarm is always rendering. The original setup was no longer suitable: two direct-attached SAS RAID units holding 50TB of data, connected to a physical Windows file server. As the system aged, several problems popped up:

- Restarting the server to keep it patched caused a 15-20 minute outage, as the server took a long time to start between long POST times and a suspiciously slow internal RAID

- Long backup times, with a manual failover that involved renaming the backup server and changing its IPs

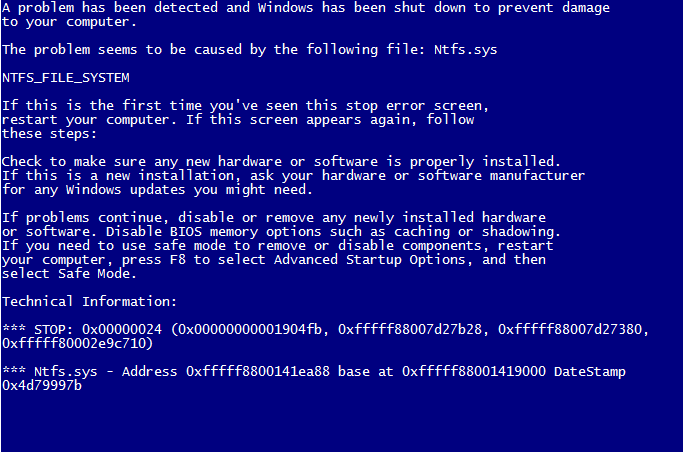

- A file system corruption that caused NTFS.sys to BSOD the server

When you get this on a new build you’re in serious trouble

The first step was to mitigate the NTFS.sys issue, as it was happening more and more often until it was every 10 minutes. First, a SAS card was added to the virtual host, and a new Server 2012R2 virtual machine was built and prepared. We chose Server 2012R2 because its chkdsk had some serious improvements over the one in 2008R2. We turned off the old server, updated the SAS mappings on the RAID, and mounted the RAID in the new server as an RDM (raw device mapping) disk. Server 2012R2 still suffered the same NTFS.sys BSODs, but the restart times were now 20 seconds instead of 20 minutes, so we were able to limp along until that evening, when we scheduled an outage. We were able to repair the disk using chkdsk, which bought us enough time to source the new equipment.

The requirements were:

- Must be expandable to 200TB+

- 10GbE

- HA / Failover for SMB

- Dual power supplies

- Reliable

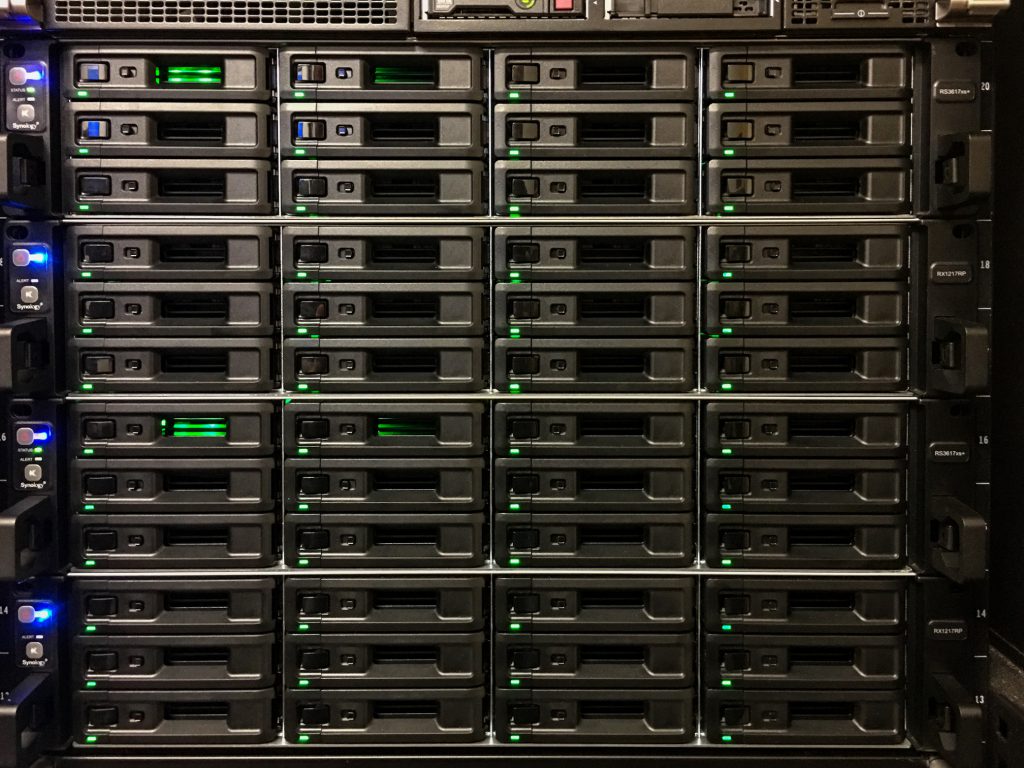

The RC18015xs+ setup | The RS3617xs+ and RX1217 setup

After some research we settled on a simple but effective solution: a NAS. By keeping it simple we take out a few of the complexities around a virtual server with RDM and reduce licensing costs. The final choice was between two Synology units, the RC18015xs+ and the RS3617xs+. The main difference was that the RC18015xs+ uses dual-port SAS expansion units to share the same drives, which means that if you lose any expansion unit you won't be able to fail over. Of course, that would require either total power loss to the unit or a hardware failure, but nonetheless the customer opted for two fully independent systems for redundancy. We also already had 32-odd WD Enterprise 6TB SATA drives that were still in warranty, so re-using them would save on CapEx.

The equipment arrived: 1x RS3617xs, 12x 10TB WD Enterprise SATA drives, 2x RS3617xs+, 2x RX1217RP, 2x 16GB RAM expansion, 4x 256GB SSD caches, and 14 WD Enterprise 6TB SATA drives. We kicked off the next stage of the project. We built both units and racked them, only putting disks in the first unit, which gave us 72TB and 256GB of writable SSD cache. The second 10GbE port on the VM host was removed from redundancy (replaced with a 1GbE failover) and connected directly to the first NAS (NAS01), and a copy was started directly from the file server. It took just over a week to copy everything over, as we had to throttle the copy during business hours because latency increased for staff. During the week we ran incremental copies every evening at 9pm until cutover day. Basic tests were completed, and AD binding and group permissions were set up, during business hours.
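The nightly incremental passes can be illustrated with a short sketch. The post does not say which tool performed the copies, so this Python version is only an assumption about the general technique: walk the source tree and copy any file that is missing on the destination or whose size or modification time differs. The function name `incremental_copy` and its parameters are hypothetical.

```python
import os
import shutil


def incremental_copy(src_root, dst_root):
    """Copy files that are new or changed; return the number of files copied.

    Illustrative only: a file counts as changed when its size differs or the
    source has a newer modification time (compared at whole-second precision).
    Deletions on the source are not propagated.
    """
    copied = 0
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel = os.path.relpath(dirpath, src_root)
        dst_dir = os.path.join(dst_root, rel)
        os.makedirs(dst_dir, exist_ok=True)
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.join(dst_dir, name)
            src_stat = os.stat(src)
            if os.path.exists(dst):
                dst_stat = os.stat(dst)
                unchanged = (
                    dst_stat.st_size == src_stat.st_size
                    and int(dst_stat.st_mtime) >= int(src_stat.st_mtime)
                )
                if unchanged:
                    continue
            # copy2 preserves the modification time, so the next pass
            # can recognise the file as unchanged.
            shutil.copy2(src, dst)
            copied += 1
    return copied
```

Because each pass only moves what changed since the previous one, the final pass at cutover has far less data to transfer than the initial bulk copy.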

Cutover was a 4-hour window on a Friday night. SMB was disabled on the Windows server and a final differential copy was completed in just an hour, which was impressive with over 20 million files. The IP of the NAS was changed to the production VLAN and the group policy mappings were updated. The Windows file server was then shut down and the renderfarm restarted to pick up the new file server settings. The offsite backup NAS (RS3617xs) was configured, and Cloud Sync was set up and started syncing. The local server running the backup RAID was also configured to take differential copies from NAS01 for the week, so files were always in two places. The weekend rendering showed great performance, with the SSD read cache achieving an 80% hit rate. Come Monday, aside from a few mapping issues with group policy, everything was working perfectly.
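For a sense of scale, the figures quoted in this post can be turned into rough averages. This is a back-of-the-envelope sketch, assuming decimal units (1TB = 10^12 bytes), the 50TB data set mentioned at the start, and exactly seven days for the initial copy. The post says it took "just over a week" and was throttled during business hours, so the real average was slightly lower and the off-hours rate higher. The helper names are hypothetical.

```python
def average_rate_mb_s(total_tb, days):
    """Average transfer rate in MB/s for total_tb terabytes over the given days."""
    total_bytes = total_tb * 10**12
    seconds = days * 24 * 60 * 60
    return total_bytes / seconds / 10**6


def files_per_second(file_count, hours):
    """Average number of files processed per second over the given hours."""
    return file_count / (hours * 3600)


# Initial bulk copy: 50TB in roughly a week.
print(round(average_rate_mb_s(50, 7), 1))      # 82.7

# Final differential pass: 20 million files checked in about an hour.
print(round(files_per_second(20_000_000, 1)))  # 5556
```

An average of roughly 83 MB/s is well below what a 10GbE link can carry, which is consistent with the copy being throttled and dominated by very large numbers of small files rather than by raw bandwidth.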

After the first week the offsite sync had completed, so the drives were removed from the backup RAID and placed into NAS01 and NAS02. The volume was expanded to the full 22x 6TB drives and HA sync was set up to NAS02. After another week the setup was fully installed, configured, and working, providing onsite redundancy, easily recoverable files via snapshots, and a full DR plan with an offsite backup server ready to go with a single firewall change (it is kept restricted from the network for security reasons unless DR is needed).

265TB worth of drives